Logging MySQL queries to a table

I regularly need to find out which queries are being executed while debugging or investigating application performance. I used to configure MySQL to log into a file and then search the output to see what’s happening.

It’s also possible to log into a table instead of a file. That makes it possible to find logs that satisfy specific criteria using SQL queries.

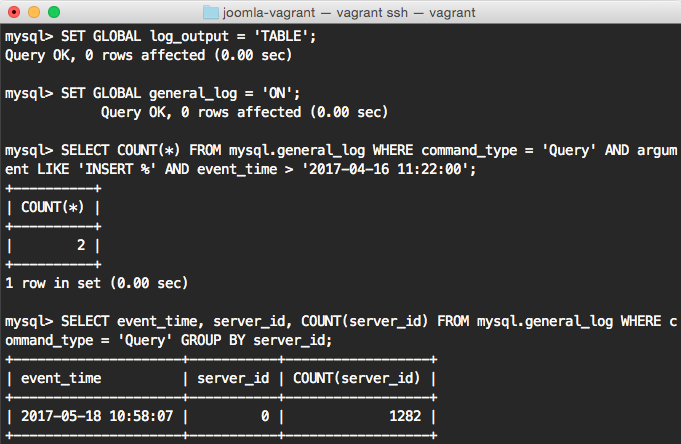

To start sending logs to the table at runtime, enable the general query log and set the output to TABLE:

SET GLOBAL log_output = 'TABLE';

SET GLOBAL general_log = 'ON';That’s it, you’re ready to go! Now run some queries and take a look at the mysql.general_log table:

SELECT * FROM mysql.general_log;The table contains the following columns:

event_time: when the query was run.user_host: the user that ran the query and the host it connected from.thread_id: the connection id.server_id: the identifier of the server when used in replication.command_type: type of command that was run.argument: the full query if command type wasQuery,Execute(orPrepare)

Great! Now we can narrow the search down easily. For example, let’s find all INSERTS:

SELECT * FROM mysql.general_log WHERE command_type = 'Query' AND argument LIKE 'INSERT %';The same logic applies to the slow log as well. All queries that take longer to execute than the threshold defined in the long_query_time global variable will end up in the mysql.slow_log table.

Finally, it’s worth noting that these tables use the CSV storage engine by default. To make your queries a bit more performant, you can convert them to MyISAM and optimize the table structure by adding indexes.

For example, to convert the general log to MyISAM and index the event_time timestamp column:

SET @old_log_state = @@global.general_log;

SET GLOBAL general_log = 'OFF';

ALTER TABLE mysql.general_log ENGINE = MyISAM;

ALTER TABLE mysql.general_log ADD INDEX (event_time);

SET GLOBAL general_log = @old_log_state;Happy logging!